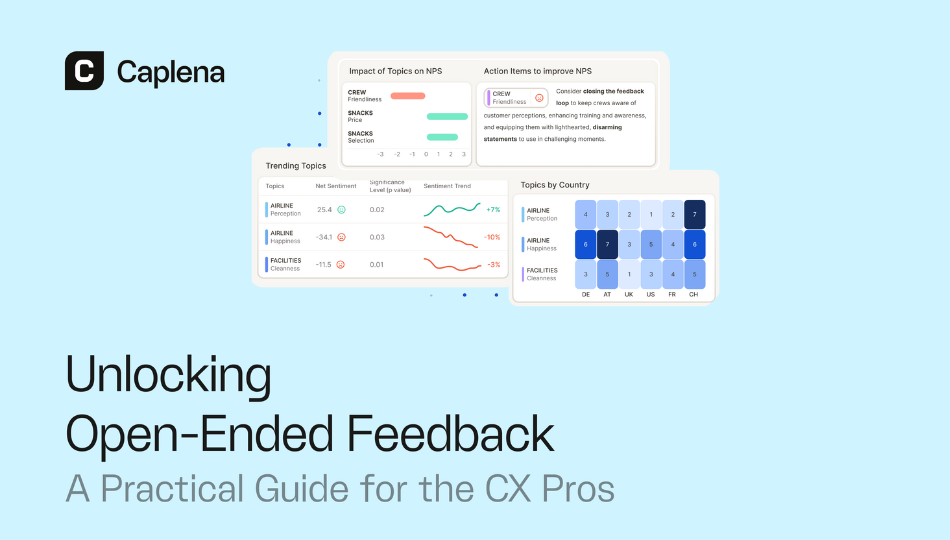

Unlocking Open-Ended Feedback: A Practical Guide for CX Pros

Editor’s note: In the lead-up to MRMW EU 2025, we are spotlighting thought leadership from our partners and sponsors to share the latest perspectives shaping the insights industry. In this feature, our partner Caplena explores how open-ended feedback is being transformed by AI, helping CX pros analyze unstructured responses efficiently and turn them into meaningful insights.

Open-ended responses are a gold mine of insights, but are often buried in mountains of raw, multilingual text. Sorting through thousands of comments is a massive drain on time and resources.

AI is making this more practical. Not the hype of “AI doing everything,” but real tools that surface what matters while keeping humans in control.

This guide shows how to combine AI with reliable research practices to turn unstructured feedback into meaningful insight.

The Value of Asking Open-Ended Questions

Both open and closed questions have value, but they solve different problems:

- Open-ended questions capture what you didn’t think to ask. They are harder to analyze but reveal what customers care about, not just what you assume is important.

- Closed-ended questions deliver structured data that is easy to quantify. Perfect for testing predefined ideas.

The smartest approach is to use both. For example, start with a rating question, then follow up with “Why?” It’s simple, but it works.

What Researchers Are Doing in Practice

In Caplena’s survey of 50 research professionals, nearly half reported using open-ended questions in almost every study. Only 3% avoided them entirely. Two-thirds said they plan to use more open-ends in the next few years.

As one participant put it: “Open-ended questions are the best way for us to uncover things a customer didn’t know they didn’t know.”

👉 See how teams at DHL, Lufthansa, and Kia Europe are scaling open-end analysis in real-world research projects

Why Do Teams Avoid Asking Open-Ended Questions?

Three big barriers come up repeatedly: time, effort, and cost. Then there are the usual challenges like survey fatigue and skipped questions.

As one researcher we surveyed put it: “We have to pay for third-party coding any time we include them or do the manual work of identifying themes. Time and money hold us back.”

Another added: “I think with emerging technology, we’ll see more open-ends. They’re valuable, but they’ve been a nightmare to code and analyze.”

That last point is important. The technology is finally catching up to make this more manageable.

Four Principles to Write Better Open-Ended Questions

1. Don’t Overdo It

Analysis of over 100,000 projects showed empty response rates spiking past 75% when surveys included six or more open-ended questions. A solid guideline is to limit yourself to three open-ended questions, plus up to six rating or multiple-choice items.

Position open-ends as follow-ups, especially after rating questions. People will often explain why they gave a “3 out of 5” if you simply ask.

2. Make Them Concrete

Compare these two questions:

- “What do you like or dislike about flying with us?”

- “How was your experience on your last flight?”

The first yields vague, generic responses. The second prompts specific memories. The difference in quality is dramatic.

3. Don’t Split Likes and Dislikes

Separate questions for positives and negatives waste respondents’ patience. Respondents often confuse the fields, so you end up with complaints in the “liked” box and praise in the “disliked” one.

4. Be Intentional About Anonymity

Only ask for named responses if you plan to follow up. For sensitive topics, especially employee feedback, anonymity is essential for honest answers.

Three Main Approaches to Handling Open-Ended Data

- Manual Coding

Traditional coding with spreadsheets and codebooks delivers precision but does not scale. It requires significant person-hours even for small datasets.

- Rule-Based Systems

Tools like Qualtrics Text iQ or Medallia Text Analytics automate some work with rule-based logic you set up. They are faster than manual coding but need ongoing maintenance and fine-tuning.

- AI-Powered Platforms

Machine learning models can detect topics automatically without needing you to define every synonym up front. These systems learn from context, not just keywords.

Topic Detection That Works in Practice

Good AI does not force you to build a codebook in advance. It reads responses and surfaces meaningful themes. But to be genuinely useful, it needs to follow the MECE principle:

- Mutually Exclusive: Topics should not overlap

- Collectively Exhaustive: Together they should cover the entire content
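One rough way to sanity-check a topic set against MECE is sketched below. It assumes each response has already been coded with a set of topic names; the function name `mece_report`, the sample data, and the 0.5 overlap threshold are all hypothetical choices for illustration, not part of any specific platform. Coverage approximates "collectively exhaustive", and a high co-occurrence (Jaccard) score between two topics is only a hint that they may overlap, since a single response can legitimately mention several distinct topics.

```python
from itertools import combinations


def mece_report(coded, overlap_threshold=0.5):
    """coded: list of sets of topic names, one set per response.

    Returns (coverage, overlaps) where coverage is the share of responses
    with at least one topic, and overlaps lists topic pairs whose Jaccard
    co-occurrence meets the (assumed) threshold.
    """
    total = len(coded)
    uncovered = sum(1 for topics in coded if not topics)
    coverage = 1 - uncovered / total if total else 0.0

    # Map each topic to the indices of the responses that mention it.
    by_topic = {}
    for i, topics in enumerate(coded):
        for topic in topics:
            by_topic.setdefault(topic, set()).add(i)

    overlaps = []
    for a, b in combinations(sorted(by_topic), 2):
        shared = len(by_topic[a] & by_topic[b])
        either = len(by_topic[a] | by_topic[b])
        jaccard = shared / either
        if jaccard >= overlap_threshold:
            overlaps.append((a, b, round(jaccard, 2)))
    return coverage, overlaps


# Hypothetical coded responses: "price" and "cost" almost always co-occur.
coded = [
    {"price"}, {"price", "cost"}, {"cost", "price"}, {"staff"},
    set(), {"staff"}, {"price", "cost"}, {"delay"},
]
coverage, overlaps = mece_report(coded)
print(coverage, overlaps)
```

In this made-up sample, "price" and "cost" get flagged as candidates for merging, and the one uncoded response shows up as a coverage gap worth reviewing by hand.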

Some general-purpose models like ChatGPT work well for small datasets (around 50 responses), but they can struggle with statistical rigor at scale (500+ responses).

Most projects end up with 10 to 100 topics. Complex use cases, such as airlines tracking the entire customer journey, might require 300 or more.

Making Sure Quality Control Is Transparent

AI should not be a black box. The best platforms show how well their models perform with metrics like the F1 score, which ranges from 0 to 1. Anything above 0.7 is solid.
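For readers who want to see what sits behind that number, here is a minimal sketch of an F1 calculation for a single topic. F1 is the harmonic mean of precision (how many AI-assigned labels were right) and recall (how many true labels the AI found). The function name and the sample labels are illustrative assumptions; platforms may aggregate across topics in different ways.

```python
def f1_score(human_labels, ai_labels):
    """F1 for one topic.

    human_labels and ai_labels are parallel lists of booleans:
    True means the topic was assigned to that response.
    """
    pairs = list(zip(human_labels, ai_labels))
    tp = sum(1 for h, a in pairs if h and a)        # both assigned the topic
    fp = sum(1 for h, a in pairs if not h and a)    # AI only
    fn = sum(1 for h, a in pairs if h and not a)    # human only
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical labels for eight responses on one topic.
human = [True, True, True, False, False, True, False, False]
ai = [True, True, False, False, True, True, False, False]
score = f1_score(human, ai)
print(score)
```

Here the AI matches the human coder on three of four true cases and adds one false alarm, which puts precision and recall both at 0.75.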

One study compared human-only analysis with AI-assisted analysis:

- Human-only: 53

- Human plus AI: 63

Moving from Simple to Topic-Level Sentiment Analysis

Overall sentiment is interesting but not actionable. A 200-word rant labeled “negative” does not tell you what needs fixing. Topic-level sentiment breaks the experience into parts. You see exactly which aspects of the journey need work.

For example, an airline might discover boarding consistently gets positive sentiment while baggage handling is a major pain point. That level of detail is where real improvements start.
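The airline example can be sketched in a few lines, assuming each mention has already been tagged with a topic and a sentiment score of -1 (negative), 0 (neutral), or 1 (positive). The function name, the scoring scale, and the sample rows are assumptions made for illustration only.

```python
from collections import defaultdict


def topic_sentiment(rows):
    """rows: iterable of (topic, sentiment) pairs, sentiment in {-1, 0, 1}.

    Returns the average sentiment per topic.
    """
    totals = defaultdict(lambda: [0, 0])  # topic -> [sentiment sum, mention count]
    for topic, sentiment in rows:
        totals[topic][0] += sentiment
        totals[topic][1] += 1
    return {topic: total / count for topic, (total, count) in totals.items()}


# Hypothetical tagged mentions from airline feedback.
rows = [
    ("boarding", 1), ("boarding", 1), ("boarding", 0),
    ("baggage handling", -1), ("baggage handling", -1),
    ("baggage handling", 1), ("baggage handling", -1),
]
scores = topic_sentiment(rows)
for topic, avg in sorted(scores.items(), key=lambda item: item[1]):
    print(f"{topic}: {avg:+.2f}")
```

Sorting from most negative to most positive puts the biggest pain points at the top of the list.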

Connecting Open-Ended Data to the Rest of Your Insights

The real power comes from combining open-ended feedback with structured data. You can explore which topics matter most to business-class versus economy travelers, or see how sentiment shifts by region.
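A simple version of that segment comparison might look like the sketch below. It assumes each response carries a segment label from your structured data plus a set of coded topics; the function name, segment names, and topics are hypothetical.

```python
from collections import Counter


def topic_share_by_segment(responses):
    """responses: list of (segment, set_of_topics).

    Returns {segment: {topic: share of that segment's responses mentioning it}}.
    """
    segment_sizes = Counter(segment for segment, _ in responses)
    mentions = Counter(
        (segment, topic) for segment, topics in responses for topic in topics
    )
    shares = {}
    for (segment, topic), count in mentions.items():
        shares.setdefault(segment, {})[topic] = count / segment_sizes[segment]
    return shares


# Hypothetical coded responses joined with cabin class.
responses = [
    ("business", {"lounge", "seat comfort"}),
    ("business", {"lounge"}),
    ("economy", {"legroom"}),
    ("economy", {"legroom", "baggage handling"}),
    ("economy", {"baggage handling"}),
    ("economy", {"legroom"}),
]
shares = topic_share_by_segment(responses)
print(shares)
```

Using shares rather than raw counts keeps segments of different sizes comparable.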

Driver analysis takes this further by showing exactly how each topic influences key metrics like NPS or CSAT. It gives you a clear roadmap for prioritizing improvements that will move the needle.
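To make the idea concrete, here is a deliberately simplified stand-in for driver analysis: it compares the average score of respondents who mention a topic with the average of those who do not. Real driver analysis typically relies on regression or similar models that account for topics appearing together, so treat this as a rough first look. The function name, the 0 to 10 scores, and the topics are assumptions for illustration.

```python
from statistics import mean


def topic_impact(responses):
    """responses: list of (score, set_of_topics).

    Returns {topic: mean score with the topic - mean score without it}.
    Topics mentioned by everyone or by no one are skipped.
    """
    all_topics = set().union(*(topics for _, topics in responses))
    impact = {}
    for topic in all_topics:
        with_topic = [score for score, topics in responses if topic in topics]
        without = [score for score, topics in responses if topic not in topics]
        if with_topic and without:
            impact[topic] = mean(with_topic) - mean(without)
    return impact


# Hypothetical 0-10 recommendation scores with coded topics.
responses = [
    (9, {"friendly crew"}),
    (10, {"friendly crew"}),
    (3, {"lost baggage"}),
    (2, {"lost baggage", "delay"}),
    (6, {"delay"}),
    (8, set()),
]
impact = topic_impact(responses)
for topic, delta in sorted(impact.items(), key=lambda item: item[1]):
    print(f"{topic}: {delta:+.2f}")
```

Topics with the largest negative gap are the first candidates to investigate, though a gap on its own does not prove the topic caused the lower score.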

The Bottom Line

Open-ended feedback does not have to be a nightmare to analyze. AI tools can handle the heavy lifting by identifying themes, assigning sentiment, and uncovering patterns so you can focus on the meaning behind the results.

The key is choosing the right tool for your needs. Look for platforms that provide clear quality metrics like F1 scores, allow you to customize and refine their analysis, and integrate with your existing data systems.

If you are considering AI for feedback analysis, start small. Most platforms will analyze a sample of your data so you can compare it with your current process. Make sure it truly saves time and effort before you scale up. Put your data to the test!

The goal is not to replace human judgment but rather to reduce the manual workload so you can focus on the insights that drive your business forward.

📍MRMW Europe Returns This Autumn!

Excited to hear from Caplena onsite and learn how its team is helping CX pros harness AI to turn open-ended feedback into actionable insights?🎤

You’re not alone. At MRMW EU 2025 (Nov 6–7, Berlin), you’ll hear from Caplena and insights leaders at Scandinavian Tobacco Group, Salomon, Samsung, eBay, EDP and more—sharing real-world case studies, fresh research trends, and the strategies shaping tomorrow’s CX and market insights.

Join us for live discussions, cutting-edge case studies, round tables and interactive networking sessions. The unique MRMW concept provides the perfect balance between personal development, networking and doing business.

Reserve your seat now to connect with industry leaders and shape the future of insights!
